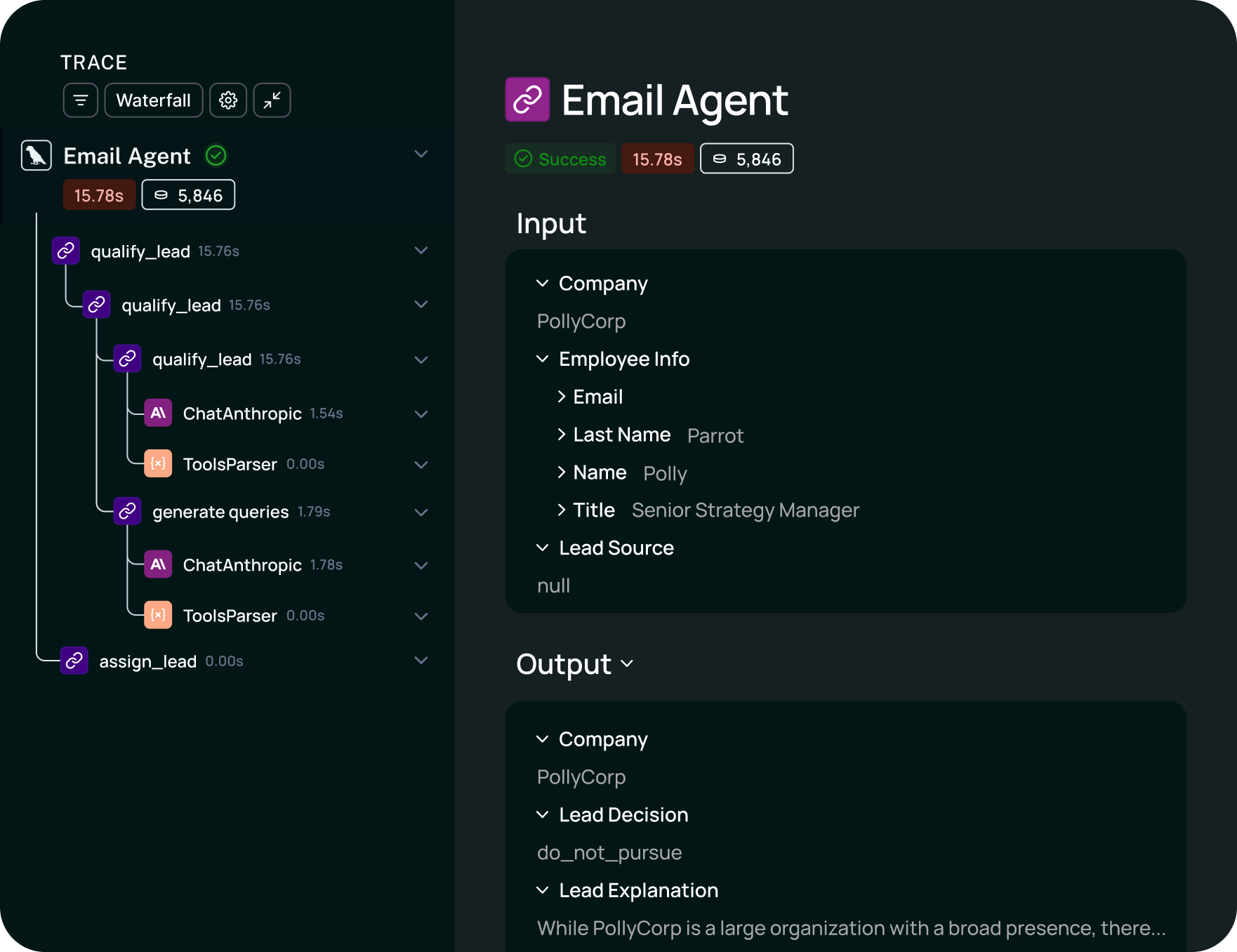

Find failures fast with agent tracing

Quickly debug and understand non-deterministic LLM app behavior with tracing. See what your agent is doing step by step, then fix issues to improve latency and response quality.

LangSmith Observability gives you complete visibility into agent behavior with tracing, real-time monitoring, alerting, and high-level insights into usage.

Helping top teams ship reliable agents

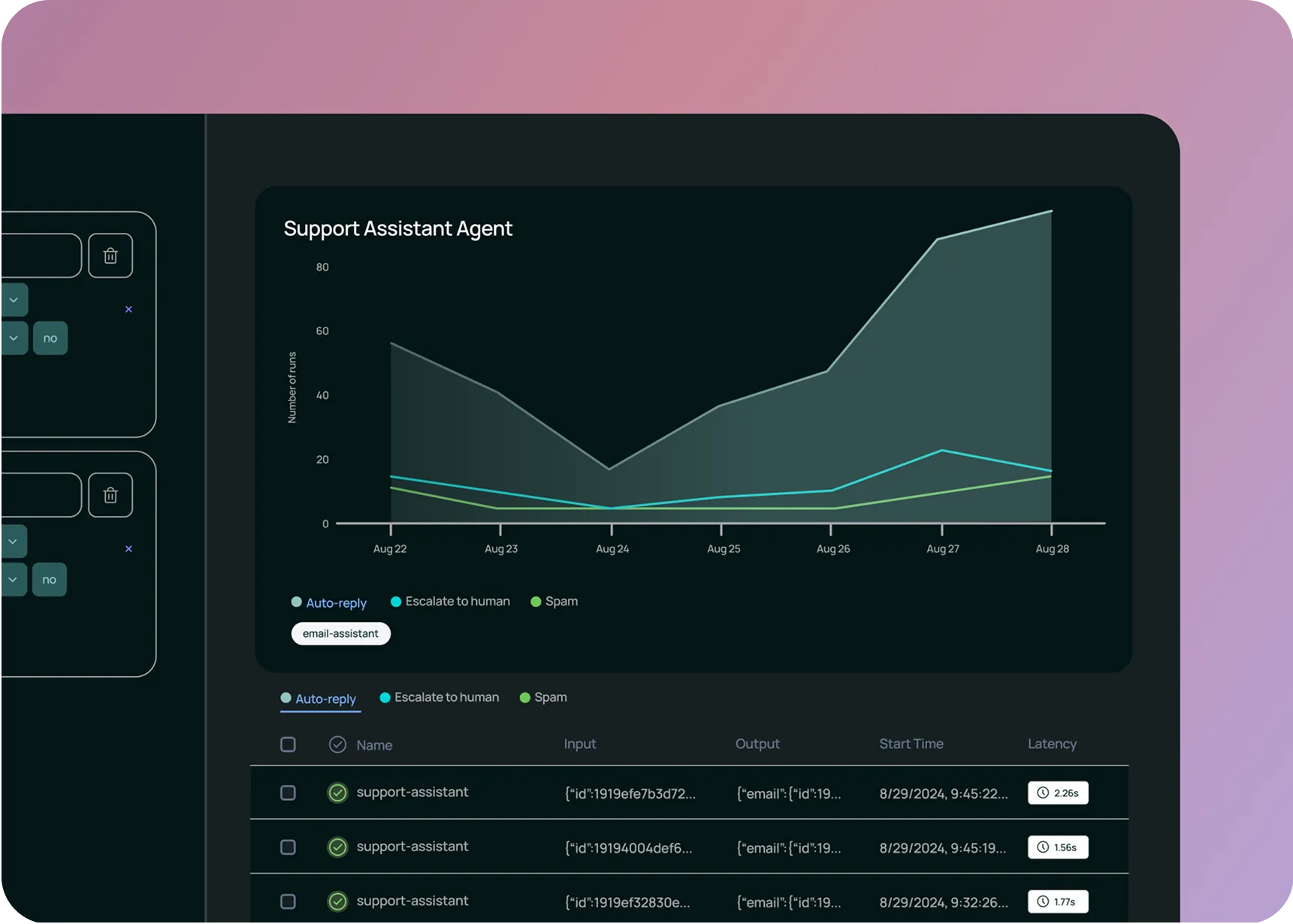

Track business-critical metrics like costs, latency, and response quality with live dashboards. Get alerts when issues happen and drill into the root cause.

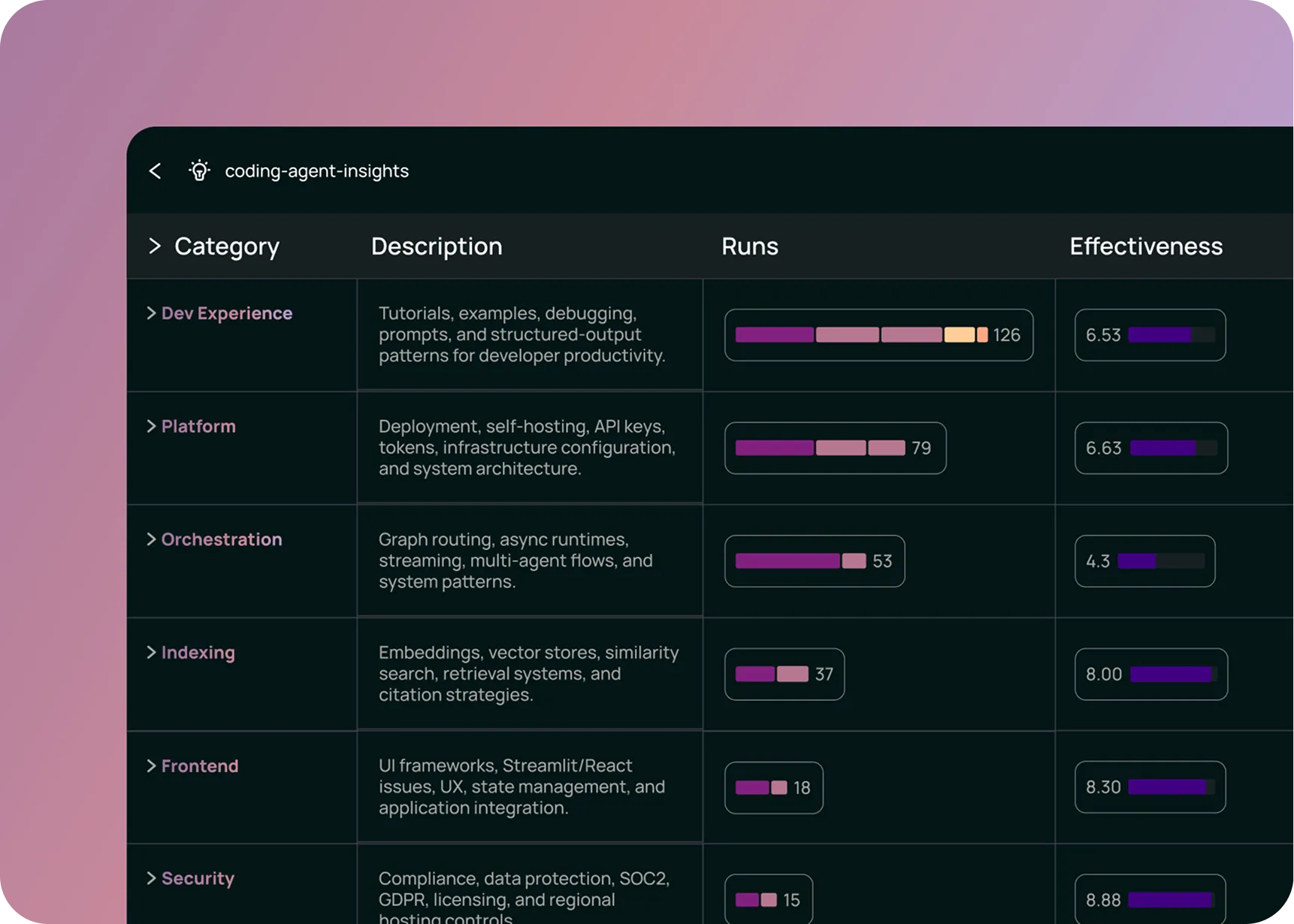

See clusters of similar conversations to understand what users actually want and quickly find all instances of similar problems to address systemic issues.

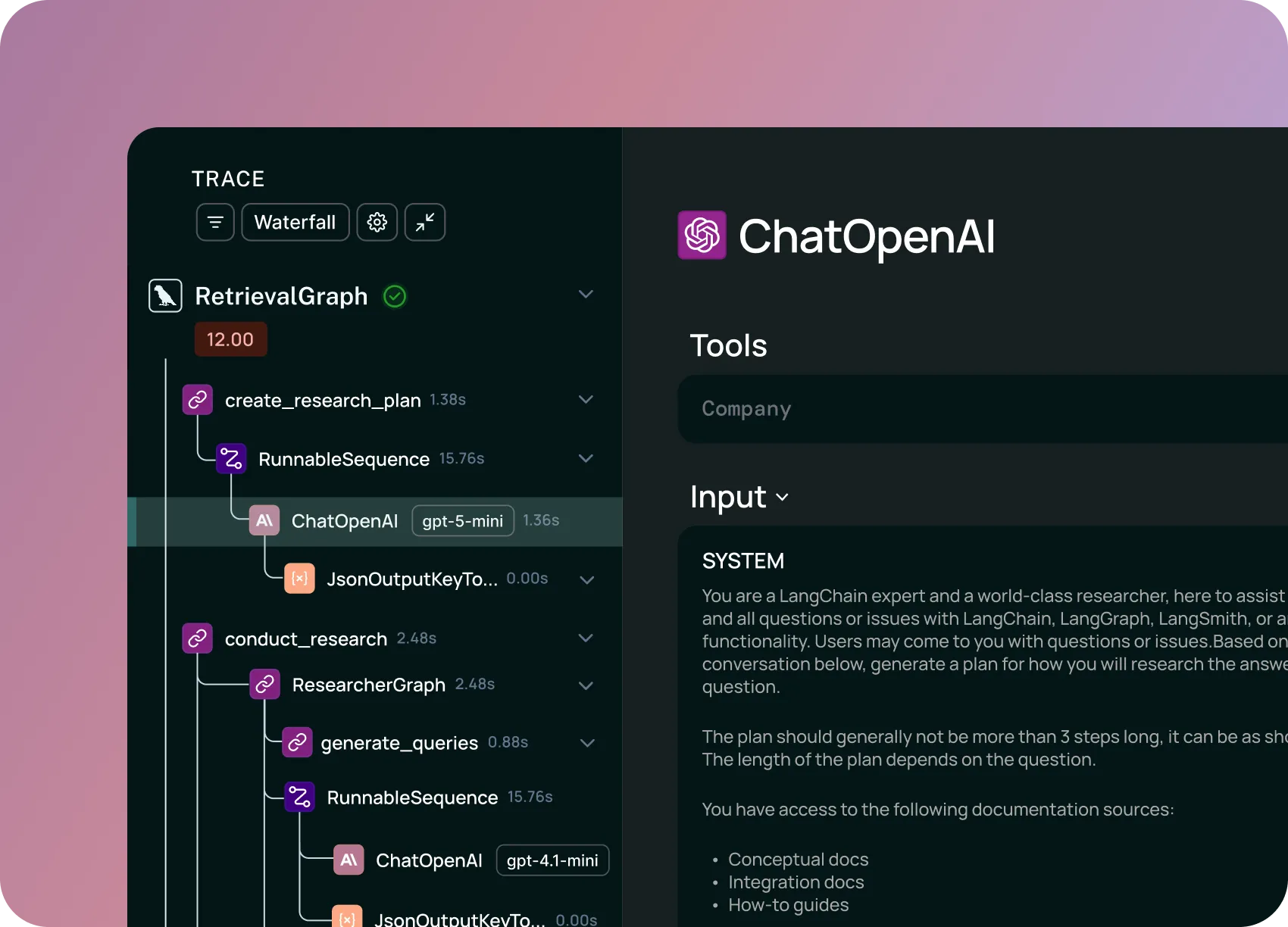

LangSmith works with any framework. If you’re already using LangChain or LangGraph, just set one environment variable to get started with tracing your AI application.
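As a rough sketch, enabling tracing from Python might look like the following. The variable names `LANGSMITH_TRACING` and `LANGSMITH_API_KEY` are assumptions that do not appear on this page, and older SDK releases used different names, so confirm them against the docs for your version. The key value is a placeholder, and an API key is assumed to be required in addition to the tracing flag.

```python
import os

# Assumed variable name; confirm against the LangSmith docs for your SDK version.
os.environ["LANGSMITH_TRACING"] = "true"

# Placeholder key. In practice this is usually set in the shell or a secrets
# manager rather than in application code.
os.environ.setdefault("LANGSMITH_API_KEY", "<your-api-key>")

# Simple sanity check that the flag is visible to this process.
tracing_enabled = os.environ.get("LANGSMITH_TRACING", "").lower() == "true"
print(f"tracing enabled: {tracing_enabled}")
```

Setting the variable in the process environment before the application imports its LLM framework is the safest ordering, since some libraries read configuration at import time.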

Teams need an LLM observability platform to understand how their AI applications behave in production. These platforms provide visibility into RAG pipelines and AI agent decisions, track model performance metrics such as cost and latency, and help debug complex failures and hallucinations by showing the complete end-to-end execution trace.

Custom dashboards track token usage, latency (P50, P99), error rates, cost breakdowns, and feedback scores. Configure alerts via webhooks or PagerDuty when metrics cross thresholds.
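To make the P50/P99 and threshold ideas concrete, here is a minimal, generic sketch of how percentile latency and an alert condition can be computed. It is not LangSmith's implementation: the function names, the nearest-rank percentile method, and the sample latencies are all illustrative assumptions.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    samples at or below it."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def breached(latencies_ms, p99_threshold_ms):
    """Return True when P99 latency exceeds the configured threshold."""
    return percentile(latencies_ms, 99) > p99_threshold_ms


# Hypothetical request latencies in milliseconds.
latencies_ms = [120, 135, 150, 160, 180, 210, 250, 400, 950, 3000]

p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
print(f"P50={p50} ms, P99={p99} ms, alert={breached(latencies_ms, 2000)}")
```

The gap between the two values is the reason dashboards show both: the median describes the typical request, while P99 exposes the slow tail that a median hides.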

LangSmith works with any LLM framework. You can trace applications built with the OpenAI SDK, Anthropic SDK, Vercel AI SDK, LlamaIndex, or custom implementations, not just LangChain. OpenTelemetry support connects LangSmith to existing pipelines.

Yes. If your team already runs observability infrastructure on OpenTelemetry, LangSmith integrates with your existing pipelines. You can send LangSmith trace data to your own tools or ingest OTel data into LangSmith. See the docs.

Yes. Observability and Evaluation work well together but don't require each other. Start with tracing and monitoring, then add evals when ready. Every plan type includes both, and you pay only for what you use.

Yes. LangSmith offers managed cloud, bring-your-own-cloud (BYOC), and self-hosted options for teams with data residency requirements. Contact us about the right option for your security needs. For more information, check out our documentation.

LangSmith cloud stores data in secure infrastructure. When you use LangSmith hosted at smith.langchain.com, data is stored in the GCP us-central1 region. If you’re on the Enterprise plan, we can deliver LangSmith to run on your Kubernetes cluster in AWS, GCP, or Azure so that data never leaves your environment. For teams with compliance requirements, self-hosted and BYOC options let you control where your data lives. For more information, check out our documentation.

No. LangSmith does not add any latency to your application. The LangSmith SDK includes a callback handler that sends traces to a LangSmith trace collector, which runs as an asynchronous, distributed process. Additionally, if LangSmith experiences an incident, your application performance will not be disrupted.
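The general pattern behind this kind of design can be sketched as a background sender: the application enqueues trace events and returns immediately, while a worker thread handles delivery and swallows delivery errors. This is a generic illustration only, not LangSmith SDK code; `BackgroundTraceSender`, `slow_send`, and the queue size are hypothetical names and values.

```python
import queue
import threading
import time


class BackgroundTraceSender:
    """Hypothetical sender: queues trace events and ships them on a worker thread."""

    def __init__(self, send_fn, max_queue=1000):
        self._send_fn = send_fn
        self._queue = queue.Queue(maxsize=max_queue)
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, event):
        """Enqueue without blocking; drop the event if the queue is full."""
        try:
            self._queue.put_nowait(event)
            return True
        except queue.Full:
            return False

    def _run(self):
        while True:
            event = self._queue.get()
            try:
                self._send_fn(event)
            except Exception:
                # A delivery failure must not propagate into the application.
                pass
            finally:
                self._queue.task_done()

    def flush(self):
        """Block until every queued event has been processed."""
        self._queue.join()


received = []


def slow_send(event):
    time.sleep(0.05)  # simulated network round trip
    received.append(event)


sender = BackgroundTraceSender(slow_send)

start = time.perf_counter()
for i in range(5):
    sender.submit({"step": i})
submit_seconds = time.perf_counter() - start

sender.flush()
print(f"submitted in {submit_seconds:.4f}s, delivered {len(received)} events")
```

In this sketch the caller pays only the cost of a queue insert, and a full queue drops events instead of blocking, which trades completeness of traces for application responsiveness.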

No. LangSmith does not use your data to train models. Your traces, prompts, and outputs remain private to your organization. See LangSmith Terms of Service for more information.

LangSmith has a free tier for development and small-scale production. Paid plans scale with trace volume. See our pricing page for details, or contact us for enterprise pricing.