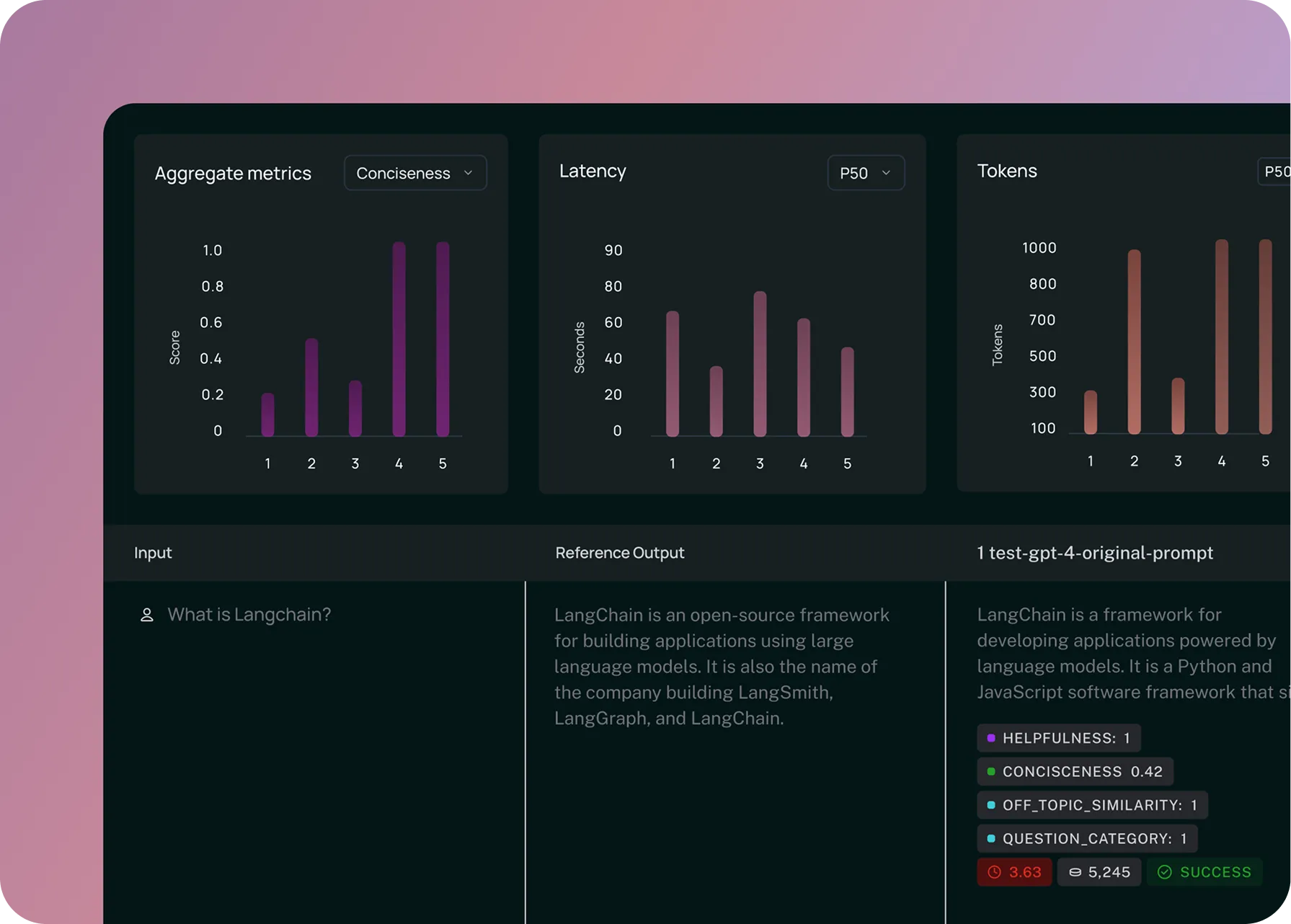

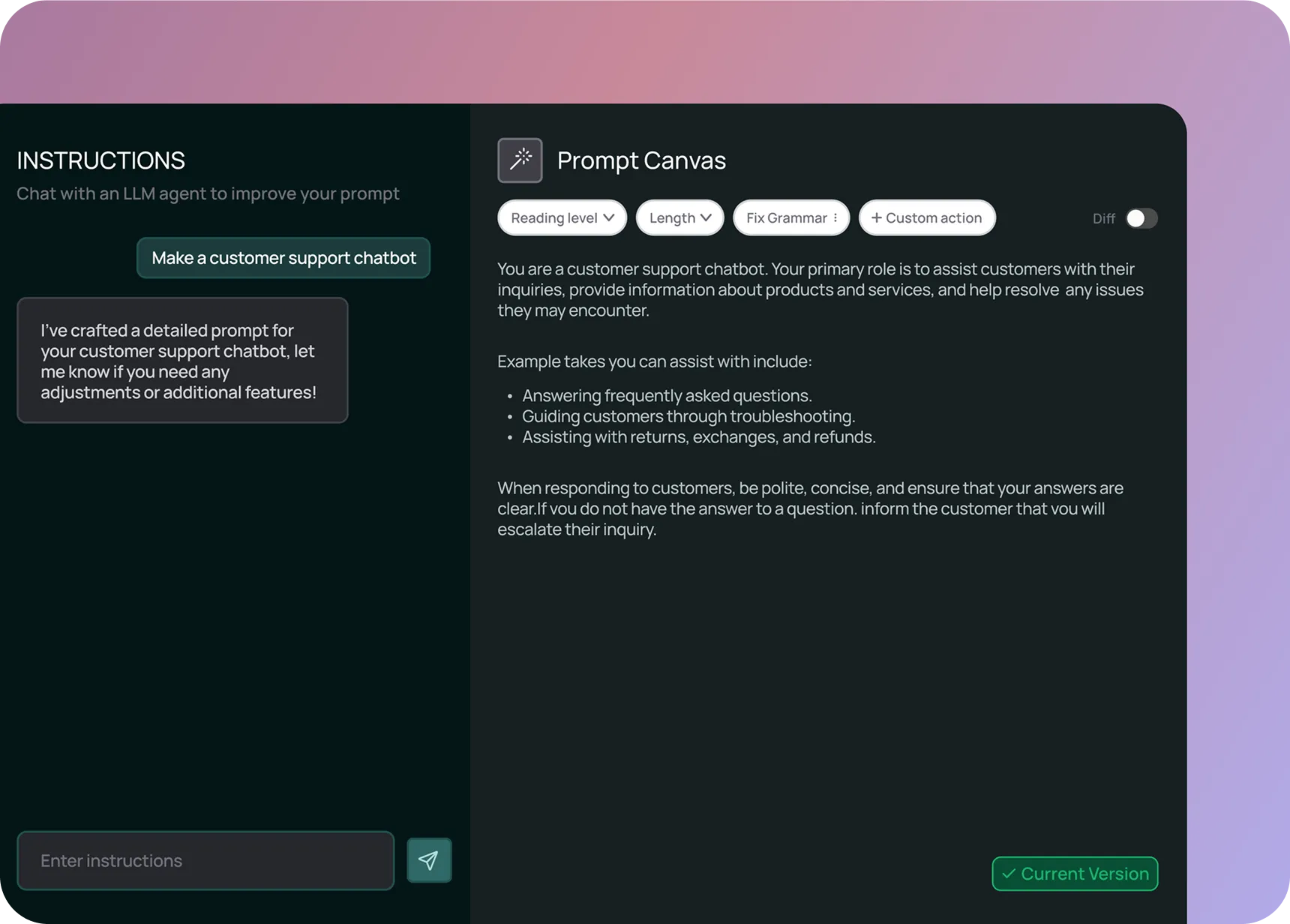

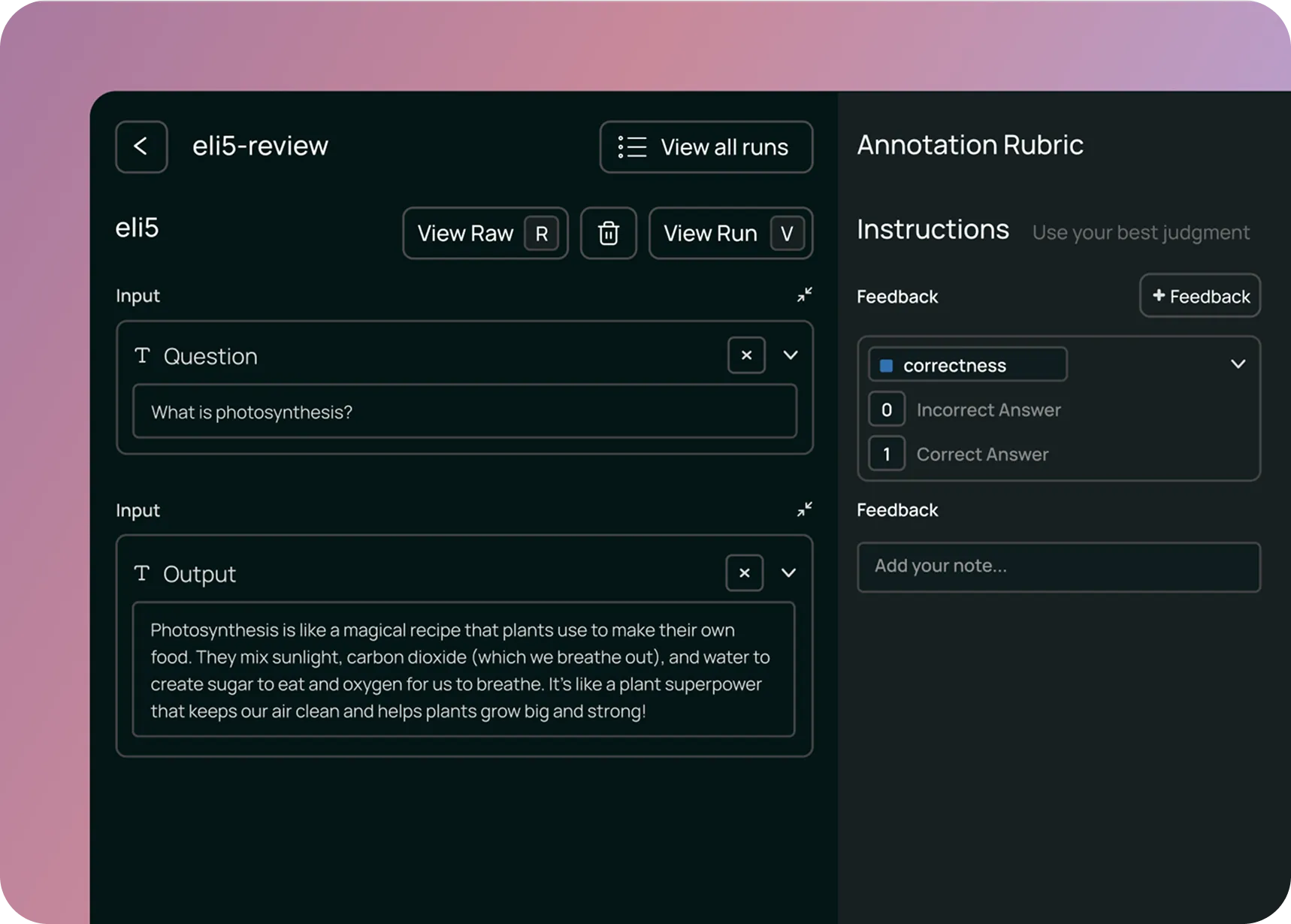

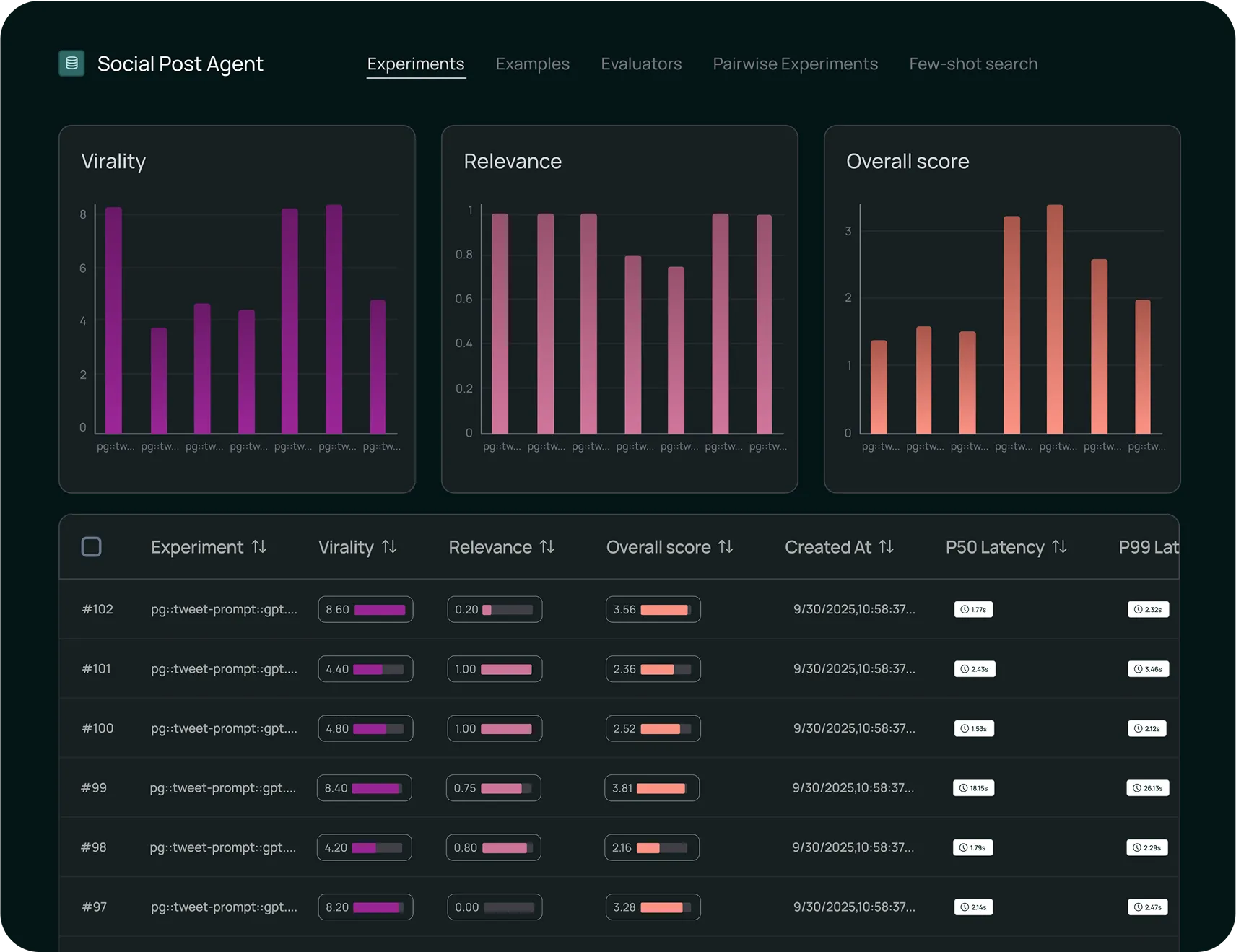

Evaluate your agent’s performance

Test with offline evaluations on datasets, or run online evaluations on production traffic. Score performance with automated evaluators (LLM-as-judge, code-based, or any custom logic) across the criteria that matter to your business.
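As a rough illustration of an offline evaluation with a code-based evaluator, here is a minimal, framework-agnostic Python sketch. The toy agent, the dataset, and the `evaluate` and `exact_match` helpers are all hypothetical stand-ins, not any product's API. The sketch runs the agent over a fixed dataset and averages each evaluator's score.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical dataset record: an input plus a reference answer.
@dataclass
class Example:
    question: str
    reference: str


# Hypothetical stand-in for the agent under test.
def toy_agent(question: str) -> str:
    answers = {"2+2": "4", "capital of France": "Paris"}
    return answers.get(question, "I don't know")


# A code-based evaluator: 1.0 for a case-insensitive exact match, else 0.0.
def exact_match(output: str, reference: str) -> float:
    return 1.0 if output.strip().lower() == reference.strip().lower() else 0.0


def evaluate(
    agent: Callable[[str], str],
    dataset: list[Example],
    evaluators: dict[str, Callable[[str, str], float]],
) -> dict[str, float]:
    """Run the agent on every example and return each evaluator's mean score."""
    totals = {name: 0.0 for name in evaluators}
    for ex in dataset:
        output = agent(ex.question)
        for name, score_fn in evaluators.items():
            totals[name] += score_fn(output, ex.reference)
    return {name: total / len(dataset) for name, total in totals.items()}


dataset = [
    Example("2+2", "4"),
    Example("capital of France", "paris"),
    Example("capital of Peru", "Lima"),
]

if __name__ == "__main__":
    print(evaluate(toy_agent, dataset, {"exact_match": exact_match}))
```

An LLM-as-judge evaluator would fit into the same `evaluators` mapping as another function that returns a score. Online evaluation would apply the same scoring functions to logged production outputs instead of a fixed dataset.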
